Our need for more speed comes from a Java unit test suite that takes so long to run that we're forced to choose between two rather bad options: either we stare at the terminal until we lose our flow, or we kick off the build and switch to another task. There are two distinct areas where we can work to improve the speed of our feedback cycle: code and build. Lasse Koskela shows you how to speed up your Java test code.

Author: Lasse Koskela, http://lassekoskela.com/

This article is based on Unit Testing in Java, to be published in April 2012. It is being reproduced here by permission from Manning Publications. Manning early access books and ebooks are sold exclusively through Manning. Visit the book’s page for more information.

The essence of speeding up test code is to find slow things and either make them run faster or not run them at all. This does not mean that you should identify your slowest test and delete it. That might be a good move, too, but perhaps it's possible to speed the test up so that you can keep it around as part of your safety net of regression tests. We will explore a number of common suspects for test slowness. Keeping this short list in mind can lead us quickly to the source of slowness and spare us the hurdle of firing up a full-blown Java profiler. Let the exploration begin!

Don’t sleep unless you’re tired

It may seem like a no-brainer, but if we want to keep our tests fast, we shouldn't let them sleep any longer than they need to. This is a surprisingly common issue in the field, however, so I want to point it out explicitly, even though we won't go into it any deeper than that. The problem of sleeping snails and its potential solutions can be summed up like this: don't rely on Thread.sleep() when you have synchronization objects that'll do the trick much more reliably.
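As a minimal sketch of that advice, assuming a hypothetical asynchronous component (`processAsync`) that signals completion through a `java.util.concurrent.CountDownLatch`, the waiting side below returns as soon as the work is done instead of sleeping for a fixed, pessimistic interval. The five-second timeout is an arbitrary upper bound, there only so that a broken component fails the test rather than hanging it.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicReference;

public class LatchInsteadOfSleep {

    // Hypothetical asynchronous component under test: it does its work on a
    // background thread and counts down the latch when the work is finished.
    static void processAsync(ExecutorService executor,
                             AtomicReference<String> result,
                             CountDownLatch done) {
        executor.submit(() -> {
            result.set("processed");
            done.countDown(); // signal completion to whoever is waiting
        });
    }

    // Test-side wait: instead of Thread.sleep(2000) "just in case", block only
    // until the latch is released, with an upper bound to avoid hanging forever.
    static String awaitProcessing() {
        ExecutorService executor = Executors.newSingleThreadExecutor();
        try {
            AtomicReference<String> result = new AtomicReference<>();
            CountDownLatch done = new CountDownLatch(1);
            processAsync(executor, result, done);
            if (!done.await(5, TimeUnit.SECONDS)) {
                throw new AssertionError("background work did not finish within 5 seconds");
            }
            return result.get();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the interrupt status
            throw new AssertionError("interrupted while waiting", e);
        } finally {
            executor.shutdownNow();
        }
    }

    public static void main(String[] args) {
        long start = System.nanoTime();
        String value = awaitProcessing();
        long elapsedMillis = (System.nanoTime() - start) / 1_000_000;
        System.out.println(value + " after " + elapsedMillis + " ms");
    }
}
```

The wait costs only as long as the work itself takes, which is typically a few milliseconds here rather than whatever sleep duration someone once guessed would be "safe".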

There. Now let’s move on to more interesting test-speed gotchas.

Beware the bloated base class

One place where I often find sources of slowness is a custom base class for tests. These base classes typically host a number of utility methods as well as common setup and teardown behaviors. All of that code may be an additional convenience for you as a developer who writes tests, but there’s a potential cost to it as well.

Most likely, not all of those tests need all of that convenience-adding behavior. If that's the case, we need to be careful not to pay the performance cost of running that code over and over again for every test in a large code base.

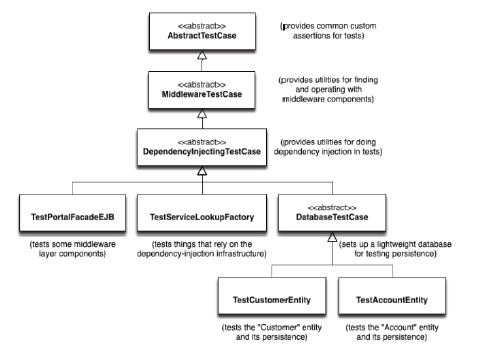

Let’s consider a scenario depicted in figure 1 where we have a hierarchy of base classes that provide a variety of utilities and setup/teardown behaviors for our concrete test classes.

Figure 1 A hierarchy of abstract base classes that provide various utilities for concrete test classes. Long inheritance chains like this can often cause unnecessary slowness.

What we have in figure 1 is an abuse of class inheritance. The AbstractTestCase class hosts custom assertions general enough to be of use to many, if not most, of our test classes.

MiddlewareTestCase extends that set of utilities with methods for looking up middleware components such as Enterprise JavaBeans. DependencyInjectingTestCase serves as a base class for any tests that need to wire up components with their dependencies. Two of the concrete test classes, TestPortalFacadeEJB and TestServiceLookupFactory, extend from it and have all of those utilities at their disposal.

Also extending from DependencyInjectingTestCase is the abstract DatabaseTestCase, which provides setup and teardown for persistence-related tests operating against a database. Figure 1 shows two concrete test classes extending from DatabaseTestCase: TestCustomerEntity and TestAccountEntity. That's quite a hierarchy, all right.

There's nothing intrinsically wrong with class hierarchies, but big hierarchies have serious downsides. For one, refactoring anything in the chain of inheritance can be much more laborious. Second, and crucially from our build-speed perspective, it is unlikely that all of the concrete classes at the bottom of the hierarchy actually need and use all of those utilities.

Let's imagine that our tests have an average of 10 milliseconds of unnecessary overhead from such an inheritance chain. In a code base with ten thousand unit tests, that adds up to 100 seconds, well over a minute and a half of extra wait time. In my last project, it would've been three minutes. And how many times a day do you run your build? Likely more than once.

In order to avoid that waste, we should prefer composition over inheritance (just as with our production code) and make use of Java's static imports and JUnit's @Rule feature to provide helper methods and setup/teardown behaviors for our tests.
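JUnit's @Rule is the tool named above; to keep this sketch free of dependencies, it imitates a rule with a hypothetical Fixture interface whose before()/after() pair loosely mirrors JUnit 4's ExternalResource. All class and method names here are made up for illustration. The point is that each test opts in to exactly the setup it needs instead of inheriting everything from a deep base-class chain.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

public class ComposedFixtures {

    // Hypothetical fixture contract, loosely modelled on the before()/after()
    // pair of JUnit 4's ExternalResource rule.
    interface Fixture {
        void before();
        void after();
    }

    // Counts how often the expensive setup actually runs (for demonstration).
    static int expensiveSetups = 0;

    // Hypothetical stand-in for costly setup that only persistence tests need.
    static final class CustomerTableFixture implements Fixture {
        final List<String> rows = new ArrayList<>();
        @Override public void before() { expensiveSetups++; rows.add("alice"); }
        @Override public void after() { rows.clear(); }
    }

    // Custom assertions in a plain utility class; a real code base would put
    // this in its own file and bring it in with "import static".
    static final class CustomAssertions {
        private CustomAssertions() {}
        static void assertNotEmpty(List<?> list) {
            if (list.isEmpty()) throw new AssertionError("expected a non-empty list");
        }
    }

    // Runs a test body with exactly the fixtures it declares. Fixtures that
    // were set up are torn down in reverse order, even if the body fails.
    static void runTest(Runnable body, Fixture... fixtures) {
        int started = 0;
        try {
            for (Fixture f : fixtures) {
                f.before();
                started++;
            }
            body.run();
        } finally {
            for (int i = started - 1; i >= 0; i--) {
                fixtures[i].after();
            }
        }
    }

    public static void main(String[] args) {
        // A pure-logic test composes no fixtures, so it pays for no setup.
        runTest(() -> CustomAssertions.assertNotEmpty(Collections.singletonList("x")));
        // A persistence-style test opts in to the one fixture it needs.
        CustomerTableFixture customers = new CustomerTableFixture();
        runTest(() -> CustomAssertions.assertNotEmpty(customers.rows), customers);
        System.out.println("expensive setups run: " + expensiveSetups); // 1
    }
}
```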

In summary, you don’t want your test to set up things you’ll never use. You also don’t want strange people crashing your party and trashing the place, which sort of leads us to the next common culprit of slow tests.

Be picky about who you invite to your test

The more code we execute, the longer it takes. That’s a simple way to put it as far as our next guideline goes. One potential way of speeding up our tests is to run less code as part of those tests. In practice, that means drawing the line tightly around the code under test and cutting off any collaborators that are irrelevant for the particular test at hand.

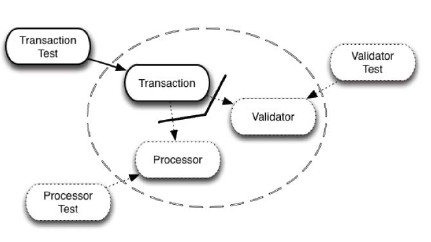

Figure 2 illustrates this with an example that involves three classes and their respective tests. Pay attention especially to the top left corner of the diagram: TransactionTest and Transaction.

Figure 2 Test isolation reduces the number of variables that may affect the results. By cutting off the neighboring components our tests run faster, too.

What we have in figure 2 is three classes from a banking system. The Transaction class is responsible for creating transactions to be sent for processing. The Validator class is responsible for running all kinds of checks against the transaction to make sure that it’s a valid transaction. The Processor’s job is to process transactions and pass them along to other systems. These three classes represent our production code. Outside of this circle are the test classes.

Let's focus on the TransactionTest. As we can see in the diagram, TransactionTest operates on the Transaction class and has cut off its two collaborators, Validator and Processor. Why? Because our tests will run faster if they short-circuit a dozen validation operations and whatever processing the Processor would do if we let it, and because the specific validations and processing these two components perform are not of interest to our TransactionTest.

Think about it this way: all we want to make sure of in TransactionTest is that the created transaction is 1) validated and 2) processed. The exact details of those actions aren't important, so we can safely stub them out for TransactionTest. Those details will be checked in ValidatorTest and ProcessorTest, respectively.
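To make the stubbing concrete, here is a sketch with hand-rolled test doubles. The class names come from figure 2, but modelling Validator and Processor as interfaces, and the methods create, validate, and process, are assumptions made for illustration; a mocking library would serve the same purpose.

```java
import java.util.ArrayList;
import java.util.List;

public class TransactionStubbing {

    // Collaborators modelled as interfaces; the method names are hypothetical.
    interface Validator { boolean validate(Transaction tx); }
    interface Processor { void process(Transaction tx); }

    // Code under test: creates a transaction, has it validated, then processed.
    static final class Transaction {
        final String from, to;
        final long amountCents;

        Transaction(String from, String to, long amountCents) {
            this.from = from;
            this.to = to;
            this.amountCents = amountCents;
        }

        static Transaction create(String from, String to, long amountCents,
                                  Validator validator, Processor processor) {
            Transaction tx = new Transaction(from, to, amountCents);
            if (!validator.validate(tx)) {
                throw new IllegalArgumentException("invalid transaction");
            }
            processor.process(tx);
            return tx;
        }
    }

    // Test doubles: no real checks, no real processing, just a record of
    // what happened so the test can verify the interaction.
    static final class RecordingValidator implements Validator {
        final List<Transaction> seen = new ArrayList<>();
        @Override public boolean validate(Transaction tx) { seen.add(tx); return true; }
    }

    static final class RecordingProcessor implements Processor {
        final List<Transaction> seen = new ArrayList<>();
        @Override public void process(Transaction tx) { seen.add(tx); }
    }

    public static void main(String[] args) {
        RecordingValidator validator = new RecordingValidator();
        RecordingProcessor processor = new RecordingProcessor();
        Transaction tx = Transaction.create("ACC-1", "ACC-2", 1_000, validator, processor);
        // The TransactionTest-style checks: it was validated, and it was processed.
        System.out.println("validated: " + validator.seen.contains(tx)
                + ", processed: " + processor.seen.contains(tx)); // validated: true, processed: true
    }
}
```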

Stubbing out such unimportant parts of a specific test can shave precious time off a long-running build. Replacing compute-intensive components with blazing-fast test doubles makes our tests faster simply because there are fewer CPU instructions to execute.

Sometimes, however, it’s not so much about the sheer number of instructions we execute but the type of instruction. One particularly common example of this is a collaborator that starts making long-distance calls to neighboring systems or the Internet. That’s why we should try to keep our tests local as far as possible.

Stay local, stay fast

As a baseline, reading a couple of bytes from memory takes a fraction of a microsecond. Reading those same bytes off a web service running in the cloud takes at least 100,000 times longer than accessing a local variable or invoking a method within the same process. This is the essence of why we should try to keep our tests as local as possible, devoid of any slow network calls.

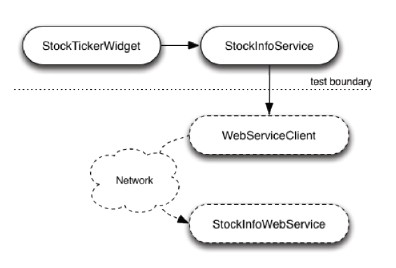

Let’s say that we’re developing a trading floor application for a Wall Street trading company. Our application integrates with all kinds of backend systems and, for the sake of the example, let’s say that one of those integrations is a web service call to query stock information. Figure 3 explains the relationship among the classes involved.

Figure 3 Leaving the actual network call outside the test saves a lot of time.

What we have here is the class under test, StockTickerWidget, calling a StockInfoService, which uses a component called WebServiceClient to make a web service call over a network connection to the server-side StockInfoWebService. When writing a test for the StockTickerWidget, we’re only interested in the interaction between it and the StockInfoService, not the interaction between the StockInfoService and the web service.

Looking at just the transport overhead, the first leg takes about one microsecond, the second about the same, and the third somewhere between 100 and 300 milliseconds. Cutting out that third leg would shrink the overhead roughly… well, a lot: from hundreds of milliseconds to a couple of microseconds.

Substituting a test double for StockInfoService or WebServiceClient would make the test more isolated, deterministic, and reliable. It would also allow us to simulate special conditions such as the network being down or web service calls timing out.
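A sketch of the same idea in code, assuming StockInfoService can be reduced to a hypothetical interface with a single lastPrice method; the exception type and method names are made up. The widget never sees a WebServiceClient, so the test stays in-process, and a second fake simulates the network being down.

```java
import java.util.Locale;

public class StockTickerWithFake {

    // Hypothetical abstraction over the stock service; in production an
    // implementation would delegate to a WebServiceClient.
    interface StockInfoService {
        double lastPrice(String symbol);
    }

    // Hypothetical exception a real implementation might throw on timeouts.
    static final class ServiceUnavailableException extends RuntimeException {
        ServiceUnavailableException(String message) { super(message); }
    }

    // Class under test: formats a ticker line from whatever the service returns.
    static final class StockTickerWidget {
        private final StockInfoService service;

        StockTickerWidget(StockInfoService service) { this.service = service; }

        String render(String symbol) {
            try {
                // Locale.ROOT keeps the decimal separator stable across machines.
                return String.format(Locale.ROOT, "%s %.2f", symbol, service.lastPrice(symbol));
            } catch (ServiceUnavailableException e) {
                return symbol + " n/a";
            }
        }
    }

    public static void main(String[] args) {
        // In-process fake: answers instantly, no WebServiceClient, no network.
        StockInfoService fixedPrice = symbol -> 123.45;
        // Simulating an outage is a one-liner with a fake.
        StockInfoService networkDown = symbol -> {
            throw new ServiceUnavailableException("simulated timeout");
        };
        System.out.println(new StockTickerWidget(fixedPrice).render("ACME"));  // ACME 123.45
        System.out.println(new StockTickerWidget(networkDown).render("ACME")); // ACME n/a
    }
}
```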

From a build-speed point of view, however, the big win is the vast speed-up from avoiding network calls, whether to a real web service running at Yahoo! or a fake one running on localhost. One or two such calls might not make much of a difference, but if they become the norm, you'll start to notice.

To recap, one of our rules of thumb is to avoid making network calls in our unit tests. More specifically, we should make sure that the code we're testing doesn't access the network, because calling the neighbors is a seriously slow conversation.

A particularly popular neighbor is the database server—the friend you always call to help you persist things. Let’s talk about that next.

Resist the temptation to hit the database

As we just established, making network calls is a pretty costly business in terms of test execution speed. That's only part of the problem, however: in many situations where we make network calls, the services we call are slow in themselves. Much of the time, that slowness boils down to further network calls or file system access behind the scenes.

If our baseline is the fraction of a microsecond it takes to read data from memory, reading that same data from an open file handle takes roughly 10 times longer. If we include opening and closing the file handle, we're talking about 1,000 times longer than accessing a variable. In short, reading from and writing to the file system is slow as molasses.

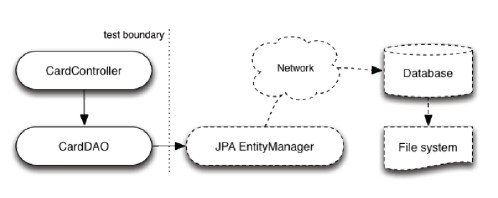

Figure 4 shows a somewhat typical setup where the class under test, CardController, uses a data access object (DAO) to query and update the actual database.

Figure 4 Bypassing the actual database call avoids unnecessary network and file system access

The CardDAO is our abstraction for persisting and looking up persisted entities related to debit and credit cards. Under the hood it uses the standard Java Persistence API (JPA) to talk to the database. If we want to bypass the database calls, we need to substitute a test double for either the underlying EntityManager or the CardDAO itself. As a rule of thumb, we should err on the side of tighter isolation.

It’s better to fake the collaborator as close to the code under test as possible. We say this because the behavior we want to specify is specifically the interaction between the code under test and the collaborator, not the collaborator’s collaborators. Moreover, substituting the direct collaborator lets you express your test code much closer to the language and vocabulary of the code and interaction you’re testing.
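The following sketch shows a fake at the CardDAO level. The findByNumber/save methods, the Card entity, and CardController.blockCard are hypothetical; the fake keeps cards in a HashMap, so no EntityManager, network connection, or disk is involved, and the test speaks the vocabulary of the DAO rather than that of JPA.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

public class CardControllerWithFakeDao {

    // Hypothetical entity; the real one would carry JPA mappings.
    static final class Card {
        final String number;
        boolean blocked;
        Card(String number) { this.number = number; }
    }

    // Hypothetical DAO contract; a production implementation would use JPA.
    interface CardDAO {
        Optional<Card> findByNumber(String number);
        void save(Card card);
    }

    // In-memory fake: a HashMap instead of network calls and file access.
    static final class InMemoryCardDAO implements CardDAO {
        final Map<String, Card> cards = new HashMap<>();
        int saves = 0;

        @Override public Optional<Card> findByNumber(String number) {
            return Optional.ofNullable(cards.get(number));
        }

        @Override public void save(Card card) {
            saves++;
            cards.put(card.number, card);
        }
    }

    // Class under test: its behavior is defined in terms of the DAO it calls.
    static final class CardController {
        private final CardDAO dao;
        CardController(CardDAO dao) { this.dao = dao; }

        /** Blocks the card if it exists; returns false for unknown numbers. */
        boolean blockCard(String number) {
            Optional<Card> card = dao.findByNumber(number);
            if (!card.isPresent()) return false;
            card.get().blocked = true;
            dao.save(card.get());
            return true;
        }
    }

    public static void main(String[] args) {
        InMemoryCardDAO dao = new InMemoryCardDAO();
        dao.cards.put("4111", new Card("4111"));
        CardController controller = new CardController(dao);
        System.out.println(controller.blockCard("4111") + " " + dao.cards.get("4111").blocked); // true true
        System.out.println(controller.blockCard("9999") + " saves=" + dao.saves);               // false saves=1
    }
}
```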

For systems where persistence plays a central role, we must pay attention to sustaining testability through our architectural decisions. To make our test-infected lives easier, we should be able to rely on a consistent architecture that lets us stub out persistence altogether in our unit tests, and test the persistence infrastructure, such as the entity mappings of an object-relational mapping (ORM) framework, in a much smaller number of integration tests.

Lightweight, drop-in replacement for the database

Aside from substituting a test double for your data access object, the next best thing might be to use a lightweight replacement for your database. For instance, if your persistence layer is built on an object-relational mapping framework and you don't need to hand-craft SQL statements, it likely doesn't make much difference whether the underlying database is Oracle, MySQL, or PostgreSQL.

In fact, most likely, you should be able to swap in something much more lightweight—an in-memory, in-process database such as HyperSQL (also known as HSQLDB). The key advantage of an in-memory, in-process database is that such a lightweight alternative conveniently avoids two of the slowest links in the persistence chain—the network calls and the file access.

In summary, we want to minimize access to the database because it's slow, and the fact that your objects get persisted is likely irrelevant to the logic and behavior your unit test is checking. Correct persistence is something we definitely want to verify, but we do that elsewhere, most likely as part of our integration tests.

Desperately trying to carry that calling the neighbors metaphor just a bit longer, we assert that friends don’t let friends use a database in their unit tests.

So far we’ve learned that unit tests should stay away from the network interface and the file system if execution speed is a factor. File I/O is not exclusive to databases, however, so let’s talk a bit more about those other common appearances of file system access in our unit tests.

There’s no slower I/O than file I/O

We want to minimize access to the file system in order to keep our tests fast. There are two parts to this: we can look at what our tests themselves are doing, and we can look at what the code under test is doing that involves file I/O. Some of that file I/O is essential to the functionality, and some of it is unnecessary or orthogonal to the behavior we want to check.

Avoid direct file access in test code

For example, our test code might suffer from split logic, reading a bunch of data from files stashed somewhere in our project’s directory structure. Not only is this inconvenient from a readability and maintainability perspective, but, if those resources are read and parsed again and again, it could be a real drag on our tests’ performance as well.

If the source of that data is not relevant to the behavior we want to check, we should consider finding a way to avoid that file I/O altogether. At the very least, we should read those external resources only once and cache them, so that we take the performance hit a single time.
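One way to read test data only once, sketched with a hypothetical CachedTestData helper: a static map shared across the tests in a JVM caches each file's lines, so repeated requests for the same file skip the disk. The list is wrapped read-only on the assumption that tests should not modify shared data; tempFileWith is a made-up helper for the demo.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;

public class CachedTestData {

    // Shared by every test in the JVM: each file is read at most once.
    private static final Map<Path, List<String>> CACHE = new ConcurrentHashMap<>();

    // Counts real disk reads, purely to make the caching visible.
    static final AtomicInteger diskReads = new AtomicInteger();

    /** Returns a file's lines, touching the file system only on first use. */
    static List<String> lines(Path file) {
        return CACHE.computeIfAbsent(file.toAbsolutePath().normalize(), p -> {
            try {
                diskReads.incrementAndGet();
                // Read-only view so one test cannot corrupt another test's data.
                return Collections.unmodifiableList(Files.readAllLines(p, StandardCharsets.UTF_8));
            } catch (IOException e) {
                throw new UncheckedIOException(e);
            }
        });
    }

    /** Hypothetical helper: writes a throwaway data file for the demo. */
    static Path tempFileWith(String... lines) {
        try {
            Path file = Files.createTempFile("testdata", ".csv");
            file.toFile().deleteOnExit();
            return Files.write(file, Arrays.asList(lines), StandardCharsets.UTF_8);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        Path file = tempFileWith("id,name", "1,alice");
        for (int i = 0; i < 3; i++) {
            lines(file); // three tests asking for the same data
        }
        System.out.println("disk reads: " + diskReads.get()); // disk reads: 1
    }
}
```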

So what about the file I/O that goes on inside the code we’re testing?

Intercept file access from code under test

When we look inside the production code, one of the most common operations that involve reading or writing to the file system is logging. This is quite convenient because the vast majority of logging that goes on in a typical code base is not essential to the business logic or behavior we’re checking with our unit tests.

Logging also happens to be quite easy to turn off entirely, which makes it one of my favorite build speed optimization tricks.

So how big an improvement are we talking about when we turn off logging for the duration of tests? I ran the tests in a code base with the normal production logging settings, and the individual unit tests' execution times ranged from 100 to 300 milliseconds. With the logging level set to OFF, the execution times dropped to a range of 1 to 150 milliseconds. This happens to be a code base littered with logging statements, so the impact is huge. Nevertheless, I wouldn't be surprised to see double-digit percentage improvements in a typical Java web application simply from disabling logging during test execution.

Since this is a favorite, let’s take a little detour and peek at an example of how it’s done.

Disable logging with a little class path trickery

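Most popular logging frameworks can be configured through a file placed on the class path. Log4j and Logback look for theirs there automatically, while the standard java.util.logging facility reads the file named by the java.util.logging.config.file system property unless you load a configuration yourself. For instance, a project built with Maven quite likely keeps its logging configuration in src/main/resources. A java.util.logging configuration file might, just for example, look a bit like this: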

```properties
handlers = java.util.logging.FileHandler

# Set the default logging level for the root logger
.level = WARNING

# Set the logging level for the file handler
java.util.logging.FileHandler.level = INFO

# Set the default logging level for the project-specific logger
com.mycompany.level = INFO
```

Most logging configurations are more elaborate than this, but it does illustrate how we might have a handler that writes our own INFO-level (or higher) messages to a file, along with any warnings or more severe messages logged by the third-party libraries we use.

Thanks to Maven’s standard directory structure, we can replace this normal logging configuration with one that we only use when running our automated tests.

All we need to do is to place another, alternative configuration file at src/test/resources, which gets picked up first because the contents of this directory are placed in the class path before src/main/resources:

```properties
# Set logging levels to a minimum when running tests
handlers = java.util.logging.ConsoleHandler
.level = OFF
com.mycompany.level = SEVERE
```

The above example of a minimal logging configuration would essentially disable all logging except errors reported by our own code. You might choose to tweak the logging level a bit to your liking, for example, to report errors from third-party libraries or to literally disable all logging including your own. When something goes wrong, tests fail, and you want to see what’s going on, it’s equally simple to temporarily enable logging and rerun the failing tests.
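Because java.util.logging does not pick up a configuration file from the class path on its own, one option is to load it explicitly before the tests run, using LogManager.readConfiguration(InputStream). The sketch below does that with a hypothetical QuietTestLogging helper; its embedded fallback is a quiet test configuration that uses SEVERE, since java.util.logging has no ERROR level. The logger names are placeholders.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.logging.Level;
import java.util.logging.LogManager;
import java.util.logging.Logger;

public class QuietTestLogging {

    // Embedded quiet configuration, used when no file is found on the class path.
    static final String QUIET_CONFIG =
            "handlers = java.util.logging.ConsoleHandler\n"
            + ".level = OFF\n"
            + "com.mycompany.level = SEVERE\n";

    /** Resets java.util.logging and applies a properties-format configuration. */
    static void apply(InputStream config) {
        try (InputStream in = config) {
            LogManager.getLogManager().readConfiguration(in);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    static void applyQuietDefaults() {
        // Properties files are read as ISO-8859-1, so encode the text the same way.
        apply(new ByteArrayInputStream(QUIET_CONFIG.getBytes(StandardCharsets.ISO_8859_1)));
    }

    /**
     * Looks up /logging.properties on the class path. On Maven's test class
     * path, the copy from src/test/resources wins over src/main/resources.
     */
    static void applyFromClassPath() {
        InputStream in = QuietTestLogging.class.getResourceAsStream("/logging.properties");
        if (in == null) {
            applyQuietDefaults();
        } else {
            apply(in);
        }
    }

    public static void main(String[] args) {
        applyQuietDefaults();
        Logger thirdParty = Logger.getLogger("org.example.thirdparty");
        Logger ours = Logger.getLogger("com.mycompany.billing");
        System.out.println(thirdParty.isLoggable(Level.SEVERE)); // false: root is OFF
        System.out.println(ours.isLoggable(Level.WARNING));      // false: below SEVERE
        System.out.println(ours.isLoggable(Level.SEVERE));       // true
    }
}
```

In a JUnit 4 suite, applyFromClassPath() could be called from a static initializer or a @BeforeClass method; alternatively, the test JVM can be started with the java.util.logging.config.file system property pointing at the quiet file.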

Summary

We’ve now made note of a handful of common culprits for slow builds. They form a checklist of sorts for what to keep an eye out for if you’re worried about your build’s slowness. Keeping these in mind is a good idea if your feedback loop starts growing too long.
